Ask Global Fishing Watch

OPTICAL SATELLITE IMAGERY

Zihan Wei, Geospatial data scientist

How does optical satellite imagery help our efforts to map human activity at sea?

Optical satellite imagery resembles what we see with our eyes by capturing reflected sunlight in the visible and near-visible parts of the spectrum. It contains rich and diverse information, such as the color, shape and texture of imaged objects, which can make it a crucial source of data for mapping human activities at sea.

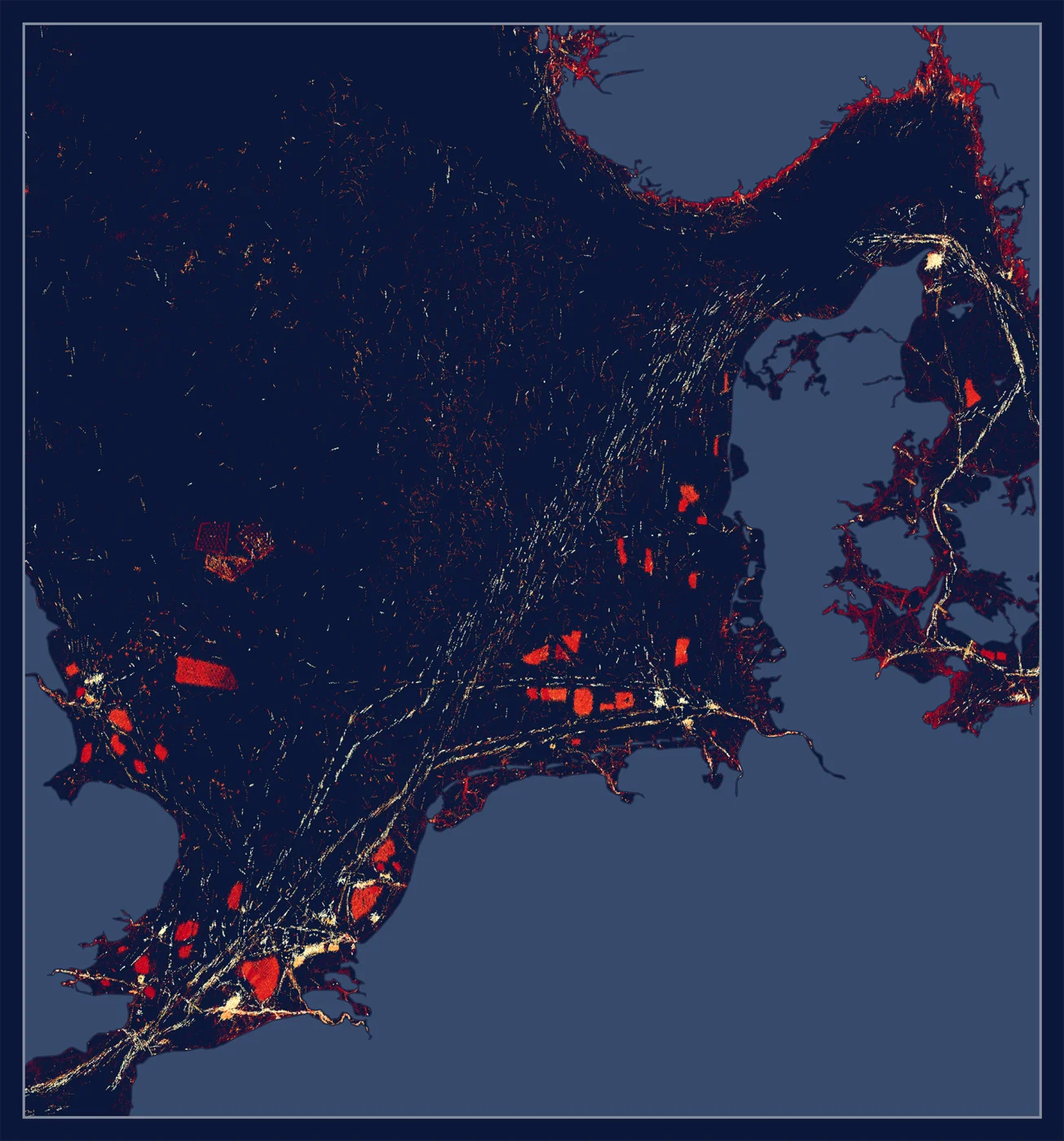

At Global Fishing Watch, we analyze medium- and high-resolution optical satellite imagery that provides consistent coverage of global vessel activity over time. Currently, we process two powerful and complementary sources of imagery at global scale: 10-meter resolution Sentinel-2 imagery, which is publicly available from the European Space Agency’s Sentinel-2 mission, and 3-meter resolution PlanetScope imagery purchased from Planet Labs. Sentinel-2 imagery provides regular and extensive coverage of the waters over the global continental shelf, where the majority of human activity at sea takes place. PlanetScope imagery draws a complete picture of near-shore activities that may be largely missed by Sentinel-2, such as small-scale fishing by vessels under 12 meters in length.

Optical satellite imagery consists of multiple bands that represent different wavelengths within the electromagnetic spectrum. Objects like vessels and aquaculture facilities, as well as features on the water like the wake of moving vessels, may stand out differently in different bands. Our detection models therefore use multiple imagery bands to leverage the unique information in each and optimize detectability. For Sentinel-2, we use the 10-meter resolution blue, green, red and near-infrared (NIR) bands. For PlanetScope, we use all eight available bands: coastal blue, blue, green I, green, yellow, red, red edge and NIR.
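To make the band selection concrete, here is a minimal sketch of how the two imagery sources and their bands might be described in code, and how the per-band values for one pixel could be gathered into a single feature vector. The `ImagerySource` class, the `pixel_features` helper and the lowercase band labels are hypothetical names chosen for illustration; they are not taken from Global Fishing Watch's actual pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImagerySource:
    """Hypothetical description of one imagery source."""
    name: str
    resolution_m: float
    bands: tuple  # band labels, in the order they are fed to a model


# Band lists follow the text; the label spellings are illustrative.
SENTINEL2 = ImagerySource("Sentinel-2", 10.0, ("blue", "green", "red", "nir"))
PLANETSCOPE = ImagerySource(
    "PlanetScope",
    3.0,
    ("coastal_blue", "blue", "green_i", "green",
     "yellow", "red", "red_edge", "nir"),
)


def pixel_features(source, band_rasters, row, col):
    """Return one value per band, in the source's band order, for one pixel.

    band_rasters maps a band label to a 2-D grid (list of rows) of values.
    """
    return [band_rasters[band][row][col] for band in source.bands]
```

Keeping the band order in one place means every raster is read in the same order, so a model always sees the same channel layout for a given source.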

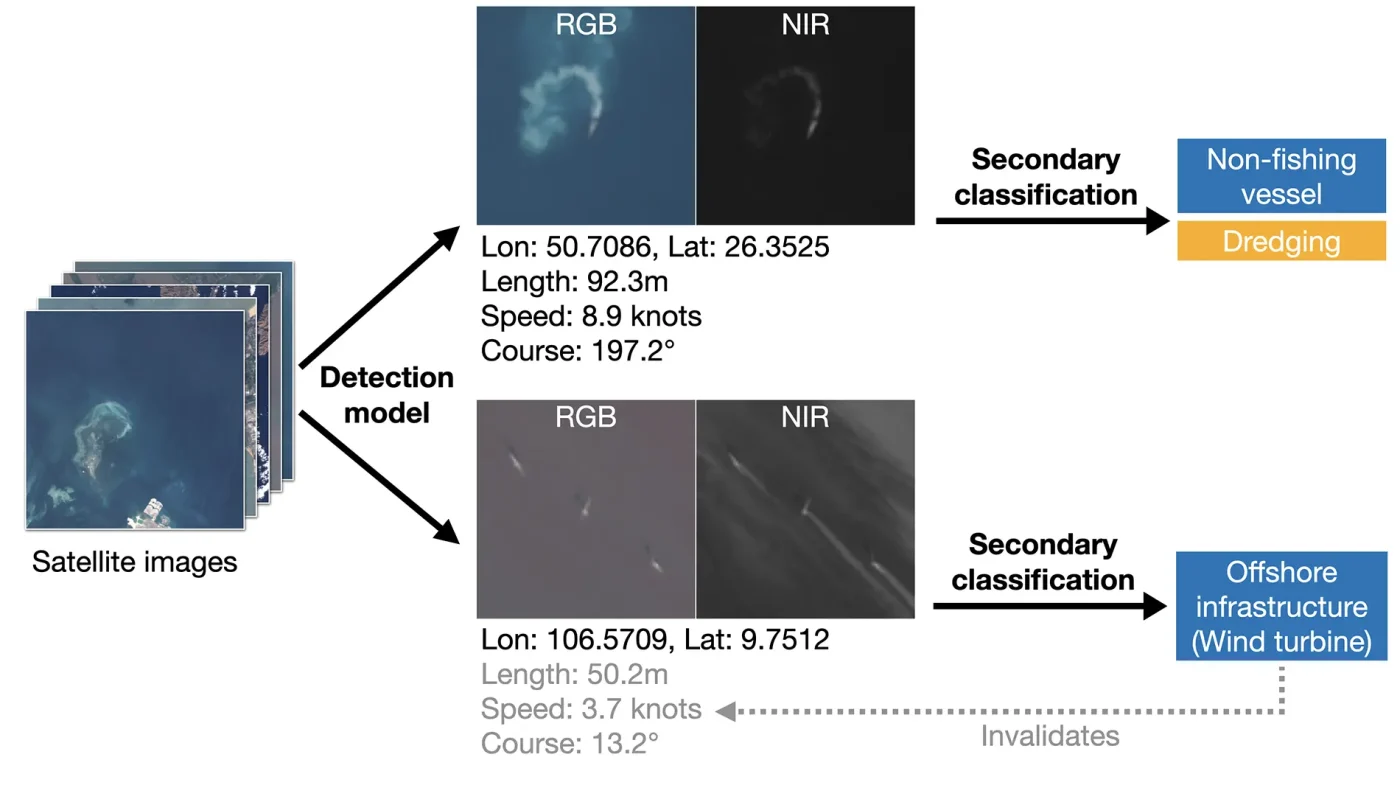

Our object detection and classification framework for optical satellite imagery involves two stages. The first stage detects vessels, and potentially other structures, through a deep-learning model customized for each imagery source. The output of the detection stage includes the center location of each detected object and its inferred length, speed and heading. We also store a small thumbnail image for each detection, showing the detected object at the center of its surroundings.
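The detection output described above can be pictured as a simple record plus a thumbnail cropped around the object. The sketch below is illustrative only: the `Detection` fields, their units (meters, knots, degrees) and the `crop_thumbnail` helper are assumptions, and the image is a plain list of rows rather than a real raster.

```python
from dataclasses import dataclass, field


@dataclass
class Detection:
    """Hypothetical record for one detected object (units are assumptions)."""
    lat: float
    lon: float
    length_m: float
    speed_knots: float
    heading_deg: float
    thumbnail: list = field(default_factory=list)


def crop_thumbnail(image, center_row, center_col, half_size, fill=0):
    """Crop a square window centered on a pixel.

    Pixels that fall outside the image are replaced by `fill`, so the
    detected object always stays at the center of the thumbnail.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    thumb = []
    for r in range(center_row - half_size, center_row + half_size + 1):
        row = []
        for c in range(center_col - half_size, center_col + half_size + 1):
            inside = 0 <= r < height and 0 <= c < width
            row.append(image[r][c] if inside else fill)
        thumb.append(row)
    return thumb
```

Padding at the image edge, rather than shifting the window, is one way to keep the object centered; a real pipeline might instead read extra pixels from a neighboring scene.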

The detection stage forms the basis of mapping human activities at sea. The second stage focuses on classifying the detections by aggregating information about the detected objects and their surroundings. We have developed a variety of dedicated deep-learning models that incorporate thumbnail images, inferred physical properties of the detected vessels and environmental information around the detections. We use these models to classify the type of object, such as fishing or non-fishing vessels, wind turbines, oil platforms and aquaculture facilities, as well as the type of activity, such as bottom trawling, dredging or vessel encounters. We create training data for these secondary classification models in two ways: from detections matched to vessels broadcasting on the automatic identification system (AIS), for which the vessel type and activities are known, and from image labeling of thousands of detections.
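One simple way to picture the AIS-based labeling is a nearest-neighbor match between a detection and known AIS positions. The sketch below is a deliberately simplified assumption, not the matching method used by Global Fishing Watch: it ignores the time offset between the image and the AIS messages, and the `label_from_ais` helper, the record keys and the 200-meter threshold are all hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def label_from_ais(detection, ais_records, max_distance_m=200.0):
    """Return the vessel_type of the nearest AIS record within range.

    detection is a (lat, lon) pair; each AIS record is a dict with
    "lat", "lon" and "vessel_type" keys. Returns None when no record
    lies within max_distance_m (an illustrative threshold).
    """
    best_type, best_dist = None, max_distance_m
    for rec in ais_records:
        dist = haversine_m(detection[0], detection[1], rec["lat"], rec["lon"])
        if dist <= best_dist:
            best_type, best_dist = rec["vessel_type"], dist
    return best_type
```

Detections that receive a label this way could serve as training examples, while unmatched detections would be left for image labeling.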

The classification stage is also key for removing noise from detections. To produce datasets of vessel detections, we need to remove non-vessel structures like wind turbines and oil rigs. In addition, many objects unrelated to human activities can stand out in optical imagery and be captured by the detection model, such as rocks, coral reefs, wave crests, clouds and sea ice. Therefore, we train our secondary classification models to identify these objects too, based on the thumbnail images.
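The noise-removal step can be sketched as sorting classified detections into groups and keeping only the vessels. The class labels below are taken from the examples in the text, but their spellings, the grouping into three sets and the `split_by_class` helper are assumptions made for illustration.

```python
# Illustrative label sets based on the examples named in the text.
VESSEL_CLASSES = {"fishing_vessel", "non_fishing_vessel"}
INFRASTRUCTURE_CLASSES = {"wind_turbine", "oil_platform", "aquaculture_facility"}
NOISE_CLASSES = {"rock", "coral_reef", "wave_crest", "cloud", "sea_ice"}


def split_by_class(classified):
    """Group (detection_id, label) pairs by broad category.

    Labels that appear in none of the sets go to "unknown" rather than
    being silently dropped.
    """
    groups = {"vessel": [], "infrastructure": [], "noise": [], "unknown": []}
    for detection_id, label in classified:
        if label in VESSEL_CLASSES:
            groups["vessel"].append(detection_id)
        elif label in INFRASTRUCTURE_CLASSES:
            groups["infrastructure"].append(detection_id)
        elif label in NOISE_CLASSES:
            groups["noise"].append(detection_id)
        else:
            groups["unknown"].append(detection_id)
    return groups
```

A vessel dataset would then be built from `groups["vessel"]` alone, while the infrastructure group could feed a separate dataset of offshore structures.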

We are able to map the presence and activity of publicly tracked vessels globally using AIS data, and to reveal “hidden” industrial vessels and offshore infrastructure at sea using satellite synthetic aperture radar (SAR) imagery. Now, Global Fishing Watch is breaking new ground again by using optical satellite imagery to identify human activities and smaller vessels that are not visible in SAR imagery. This object detection and classification work is a huge step forward, from mapping vessel presence to revealing specific activities, and is a key piece of our work to compile a complete picture of all human activities at sea.